
Practical session for Astrosim 2017

Practical work support for Astrosim 2017

5 W/2H : Why ? What ? Where ? When ? Who ? How much ? How ?

5W/2H is known as CQQCOQP (Comment ? Quoi ? Qui ? Combien ? Où ? Quand ? Pourquoi ?) in French…

- Why ? Take a look at parallelism on machines and improve the investigation process

- What ? Test with dummy examples

- When ? Friday, the 30th of June in the afternoon

- How much ? Nothing, Blaise Pascal Center provides workstations & cluster nodes

- Where ? On workstations, cluster nodes, laptop (well configured), inside terminals

- Who ? For people who want to open the hood

- How ? Applying some simple commands

Session Goal

The goal is to illustrate the relationship between parallel hardware architectures and parallelized implementations of applications.

Starting the session

Prerequisites hardware, software and humanware

In order to provide a complete functional environment, the Blaise Pascal Center supplies well-designed hardware, software and OS. People who want to carry out this practical session on their own laptop must have a real Unix operating system.

Prerequisite for hardware

Prerequisite for software

- Open graphical session on one workstation

- Open four terminals

Prerequisite for humanware

- An allergy to command line will severely restrict the range of this practical session.

Investigate Hardware

What is inside my host ?

Hardware in computing science is defined by Von Neumann architecture:

- CPU (Central Processing Unit) with CU (Control Unit) and ALU (Arithmetic and Logic Unit)

- MU (Memory Unit)

- Input and Output Devices

The first property of hardware is that its resources are limited.

On POSIX systems, everything is a file. So you can retrieve information (or set configurations) with classical file commands inside a terminal. For example, cat /proc/cpuinfo returns information about the processor.
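As a minimal sketch of this "everything is a file" approach, the ordinary commands grep and wc are enough to summarize /proc/cpuinfo. The variable names CPUINFO, NPROC and NLINES are only illustrative, and /proc/cpuinfo is assumed to exist, which is the case on GNU/Linux but not on every Unix:

```shell
# Assumption: a GNU/Linux host exposing /proc/cpuinfo (not guaranteed on other Unix systems)
CPUINFO=/proc/cpuinfo
if [ -r "$CPUINFO" ]; then
    # One "processor" entry per logical CPU
    NPROC=$(grep -c '^processor' "$CPUINFO")
    # Total number of lines returned by "cat /proc/cpuinfo"
    NLINES=$(wc -l < "$CPUINFO")
    echo "Logical processors: $NPROC"
    echo "Lines of information: $NLINES"
    # First "model name" entry, if this architecture reports one
    grep -m1 '^model name' "$CPUINFO"
else
    echo "$CPUINFO is not readable on this system"
fi
```

The same pattern (grep, wc, head, tail) applies to the other files under /proc.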

On hd6450alpha, the least powerful workstation at CBP, cat /proc/cpuinfo returns:

processor : 0
vendor_id : GenuineIntel
cpu family : 15
model : 6
model name : Intel(R) Pentium(R) D CPU 3.40GHz
stepping : 4
microcode : 0x4
cpu MHz : 3388.919
cache size : 2048 KB
physical id : 0
siblings : 2
core id : 0
cpu cores : 2
apicid : 0
initial apicid : 0
fpu : yes
fpu_exception : yes
cpuid level : 6
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx lm constant_tsc pebs bts nopl eagerfpu pni dtes64 monitor ds_cpl est cid cx16 xtpr pdcm lahf_lm
bugs :
bogomips : 6777.83
clflush size : 64
cache_alignment : 128
address sizes : 36 bits physical, 48 bits virtual
power management:

processor : 1
vendor_id : GenuineIntel
cpu family : 15
model : 6
model name : Intel(R) Pentium(R) D CPU 3.40GHz
stepping : 4
microcode : 0x4
cpu MHz : 3388.919
cache size : 2048 KB
physical id : 0
siblings : 2
core id : 1
cpu cores : 2
apicid : 1
initial apicid : 1
fpu : yes
fpu_exception : yes
cpuid level : 6
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx lm constant_tsc pebs bts nopl eagerfpu pni dtes64 monitor ds_cpl est cid cx16 xtpr pdcm lahf_lm
bugs :
bogomips : 6778.13
clflush size : 64
cache_alignment : 128
address sizes : 36 bits physical, 48 bits virtual
power management:

This command provides a lot of information (54 lines) about computing capabilities. Some entries are physical (number of cores, cache size, frequency), others are logical.

The lscpu command provides more compact information:

Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Byte Order: Little Endian
CPU(s): 2
On-line CPU(s) list: 0,1
Thread(s) per core: 1
Core(s) per socket: 2
Socket(s): 1
NUMA node(s): 1
Vendor ID: GenuineIntel
CPU family: 15
Model: 6
Model name: Intel(R) Pentium(R) D CPU 3.40GHz
Stepping: 4
CPU MHz: 3388.919
BogoMIPS: 6777.83
L1d cache: 16K
L2 cache: 2048K
NUMA node0 CPU(s): 0,1
Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx lm constant_tsc pebs bts nopl eagerfpu pni dtes64 monitor ds_cpl est cid cx16 xtpr pdcm lahf_lm

Question #1: get this information on your host with ''cat /proc/cpuinfo'' and compare it to the one above

- How many lines of information ?

Question #2 : get the information on your host with the ''lscpu'' command

- What new information appears in the output ?

- How many CPUs ? Threads per core ? Cores per socket ? Sockets ?

- How many cache levels ?

- How many “flags” ?

Exploration

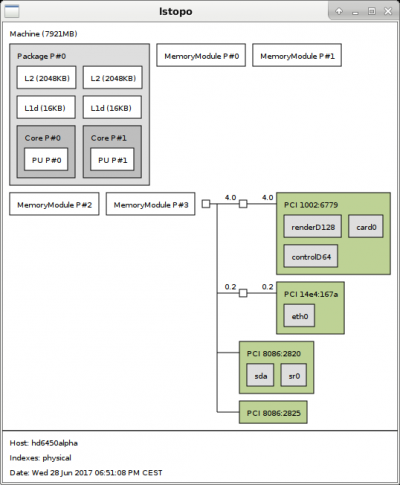

Some hardware libraries provides you a graphical view of hardware system, including peripherals. The command hwloc-ls from hwloc library offers this output:

Question #3 : get a graphical representation of hardware with ''hwloc-ls'' command

- Locate and identify the elements provided with

lscpucommand - How much memory does your host hold ?

The peripherals are listed and prefixed by PCI. The command lspci -nn provides the list of PCI devices:

00:00.0 Host bridge [0600]: Intel Corporation 82Q963/Q965 Memory Controller Hub [8086:2990] (rev 02)
00:01.0 PCI bridge [0604]: Intel Corporation 82Q963/Q965 PCI Express Root Port [8086:2991] (rev 02)
00:1a.0 USB controller [0c03]: Intel Corporation 82801H (ICH8 Family) USB UHCI Controller #4 [8086:2834] (rev 02)
00:1a.1 USB controller [0c03]: Intel Corporation 82801H (ICH8 Family) USB UHCI Controller #5 [8086:2835] (rev 02)
00:1a.7 USB controller [0c03]: Intel Corporation 82801H (ICH8 Family) USB2 EHCI Controller #2 [8086:283a] (rev 02)
00:1b.0 Audio device [0403]: Intel Corporation 82801H (ICH8 Family) HD Audio Controller [8086:284b] (rev 02)
00:1c.0 PCI bridge [0604]: Intel Corporation 82801H (ICH8 Family) PCI Express Port 1 [8086:283f] (rev 02)
00:1c.4 PCI bridge [0604]: Intel Corporation 82801H (ICH8 Family) PCI Express Port 5 [8086:2847] (rev 02)
00:1d.0 USB controller [0c03]: Intel Corporation 82801H (ICH8 Family) USB UHCI Controller #1 [8086:2830] (rev 02)
00:1d.1 USB controller [0c03]: Intel Corporation 82801H (ICH8 Family) USB UHCI Controller #2 [8086:2831] (rev 02)
00:1d.2 USB controller [0c03]: Intel Corporation 82801H (ICH8 Family) USB UHCI Controller #3 [8086:2832] (rev 02)
00:1d.7 USB controller [0c03]: Intel Corporation 82801H (ICH8 Family) USB2 EHCI Controller #1 [8086:2836] (rev 02)
00:1e.0 PCI bridge [0604]: Intel Corporation 82801 PCI Bridge [8086:244e] (rev f2)
00:1f.0 ISA bridge [0601]: Intel Corporation 82801HB/HR (ICH8/R) LPC Interface Controller [8086:2810] (rev 02)
00:1f.2 IDE interface [0101]: Intel Corporation 82801H (ICH8 Family) 4 port SATA Controller [IDE mode] [8086:2820] (rev 02)
00:1f.3 SMBus [0c05]: Intel Corporation 82801H (ICH8 Family) SMBus Controller [8086:283e] (rev 02)
00:1f.5 IDE interface [0101]: Intel Corporation 82801HR/HO/HH (ICH8R/DO/DH) 2 port SATA Controller [IDE mode] [8086:2825] (rev 02)
01:00.0 VGA compatible controller [0300]: Advanced Micro Devices, Inc. [AMD/ATI] Caicos [Radeon HD 6450/7450/8450 / R5 230 OEM] [1002:6779]
01:00.1 Audio device [0403]: Advanced Micro Devices, Inc. [AMD/ATI] Caicos HDMI Audio [Radeon HD 6450 / 7450/8450/8490 OEM / R5 230/235/235X OEM] [1002:a...
03:00.0 Ethernet controller [0200]: Broadcom Limited NetXtreme BCM5754 Gigabit Ethernet PCI Express [14e4:167a] (rev 02)

Question #4 : list the PCI peripherals with ''lspci'' command

- How many devices do you get ?

- Can you identify the listed devices in the graphical representation ?

- Which keywords in the graphical representation define the VGA device ?

Exploring dynamic system

Your hosts run a GNU/Linux operating system based on the Debian Stretch distribution.

As when you drive a car, it is useful to get information about the running system while a process executes. The commands top and htop display this information in real time.

Tiny metrology with ''/usr/bin/time''

time exists both as a command included in shells and as a standalone program. To avoid difficulties, the standalone program has to be requested by its full path, /usr/bin/time!
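A minimal sketch to check which time is used when no path is given, assuming bash is installed. The type command is a bash built-in used here only for illustration, and the variable name KIND is hypothetical:

```shell
# Assumption: bash is available; "type" is a bash built-in
# List every "time" that bash knows about (shell keyword first, then programs found in PATH)
bash -c 'type -a time'
# "type -t" prints the kind of the name that bash would actually use
KIND=$(bash -c 'type -t time')
echo "Without a path, bash uses time as a: $KIND"
```

When the reported kind is keyword, the only way to reach the standalone program is to give its path, as in /usr/bin/time.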

Introduction & Redefinition of metrology arguments

Difference between the time built-in command and the time standalone program:

time (date ; sleep 10 ; date)

Thu Jun 29 09:15:53 CEST 2017
Thu Jun 29 09:16:03 CEST 2017
real 0m10.012s
user 0m0.000s
sys 0m0.000s

/usr/bin/time bash -c 'date ; sleep 10 ; date'

Thu Jun 29 09:18:51 CEST 2017
Thu Jun 29 09:19:01 CEST 2017
0.00user 0.00system 0:10.01elapsed 0%CPU (0avgtext+0avgdata 2984maxresident)k
0inputs+0outputs (0major+481minor)pagefaults 0swaps

Pay close attention to the difference in syntax if you would like to get metrology on a sequence of commands: the bash -c prefix and the surrounding quotes are needed.

The default output of /usr/bin/time is more verbose but not easy to parse. It is better to define the output with the TIME variable. Copy/paste the following in a terminal:

export TIME='TIME Command being timed: "%C"
TIME User time (seconds): %U
TIME System time (seconds): %S
TIME Elapsed (wall clock) time : %e
TIME Percent of CPU this job got: %P
TIME Average shared text size (kbytes): %X
TIME Average unshared data size (kbytes): %D
TIME Average stack size (kbytes): %p
TIME Average total size (kbytes): %K
TIME Maximum resident set size (kbytes): %M
TIME Average resident set size (kbytes): %t
TIME Major (requiring I/O) page faults: %F
TIME Minor (reclaiming a frame) page faults: %R
TIME Voluntary context switches: %w
TIME Involuntary context switches: %c
TIME Swaps: %W
TIME File system inputs: %I
TIME File system outputs: %O
TIME Socket messages sent: %s
TIME Socket messages received: %r
TIME Signals delivered: %k
TIME Page size (bytes): %Z
TIME Exit status: %x'

echo $TIME

TIME Command being timed: "%C" TIME User time (seconds): %U TIME System time (seconds): %S TIME Elapsed (wall clock) time : %e TIME Percent of CPU this job got: %P TIME Average shared text size (kbytes): %X TIME Average unshared data size (kbytes): %D TIME Average stack size (kbytes): %p TIME Average total size (kbytes): %K TIME Maximum resident set size (kbytes): %M TIME Average resident set size (kbytes): %t TIME Major (requiring I/O) page faults: %F TIME Minor (reclaiming a frame) page faults: %R TIME Voluntary context switches: %w TIME Involuntary context switches: %c TIME Swaps: %W TIME File system inputs: %I TIME File system outputs: %O TIME Socket messages sent: %s TIME Socket messages received: %r TIME Signals delivered: %k TIME Page size (bytes): %Z TIME Exit status: %x

For the execution line above, we got something like:

Thu Jun 29 09:32:34 CEST 2017
Thu Jun 29 09:32:44 CEST 2017
TIME Command being timed: "bash -c date ; sleep 10 ; date"
TIME User time (seconds): 0.00
TIME System time (seconds): 0.00
TIME Elapsed (wall clock) time : 10.01
TIME Percent of CPU this job got: 0%
TIME Average shared text size (kbytes): 0
TIME Average unshared data size (kbytes): 0
TIME Average stack size (kbytes): 0
TIME Average total size (kbytes): 0
TIME Maximum resident set size (kbytes): 3072
TIME Average resident set size (kbytes): 0
TIME Major (requiring I/O) page faults: 0
TIME Minor (reclaiming a frame) page faults: 488
TIME Voluntary context switches: 32
TIME Involuntary context switches: 4
TIME Swaps: 0
TIME File system inputs: 0
TIME File system outputs: 0
TIME Socket messages sent: 0
TIME Socket messages received: 0
TIME Signals delivered: 0
TIME Page size (bytes): 4096
TIME Exit status: 0

Statistics on the fly ! Pentacle of statistics

R project is a complete and extended software for statistics.

- minimum: the best (in time) or the worst (in performance)

- maximum: the worst (in time) or the best (in performance)

- average: the classical metric used (but not the best on computing dynamic systems)

- median: the best metric on a set of experiments

- stddev or standard deviation: the dispersion of the measurements around the average

- variability is defined as the ratio between standard deviation and median

The tool /tmp/Rmmmms-$USER.r estimates the pentacle of statistics on a standard input stream and adds the variability in the rightmost column.

To create /tmp/Rmmmms-$USER.r, copy/paste following lines in a terminal.

tee /tmp/Rmmmms-$USER.r <<EOF
#! /usr/bin/env Rscript
d<-scan("stdin", quiet=TRUE)
cat(min(d), max(d), median(d), mean(d), sd(d), sd(d)/median(d), sep="\t")
cat("\n")
EOF
chmod u+x /tmp/Rmmmms-$USER.r

To evaluate the variability of the MEMCPY memory test over 10 launches with a size of 1GB, the command is:

mbw -a -t 0 -n 10 1000

Here is an example of output:

Long uses 8 bytes. Allocating 2*131072000 elements = 2097152000 bytes of memory.
Getting down to business... Doing 10 runs per test.
0 Method: MEMCPY Elapsed: 0.17240 MiB: 1000.00000 Copy: 5800.430 MiB/s
1 Method: MEMCPY Elapsed: 0.17239 MiB: 1000.00000 Copy: 5800.700 MiB/s
2 Method: MEMCPY Elapsed: 0.17320 MiB: 1000.00000 Copy: 5773.672 MiB/s
3 Method: MEMCPY Elapsed: 0.17304 MiB: 1000.00000 Copy: 5779.044 MiB/s
4 Method: MEMCPY Elapsed: 0.17311 MiB: 1000.00000 Copy: 5776.741 MiB/s
5 Method: MEMCPY Elapsed: 0.17315 MiB: 1000.00000 Copy: 5775.473 MiB/s
6 Method: MEMCPY Elapsed: 0.17337 MiB: 1000.00000 Copy: 5767.911 MiB/s
7 Method: MEMCPY Elapsed: 0.17429 MiB: 1000.00000 Copy: 5737.531 MiB/s
8 Method: MEMCPY Elapsed: 0.17365 MiB: 1000.00000 Copy: 5758.776 MiB/s
9 Method: MEMCPY Elapsed: 0.17327 MiB: 1000.00000 Copy: 5771.240 MiB/s

To filter and extract statistics on the fly:

mbw -a -t 0 -n 10 1000 | grep MEMCPY | awk '{ print $9 }' | /tmp/Rmmmms-$USER.r

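Here is the output for another run (minimum, maximum, median, average, standard deviation and variability, in MiB/s except for the last column):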

5595.783 5673.179 5624.503 5625.749 21.81671 0.003878869

This will be very useful to extract and provide statistics on execution times.
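On a host where R is not installed, the same six columns can be approximated with sort and awk. This is only a sketch of an alternative, not the tool used in this session: the function name mmmms is hypothetical, and the standard deviation uses the same n-1 denominator as the sd() function of R.

```shell
# Hypothetical helper: min, max, median, mean, standard deviation and sd/median
# for numbers read on standard input (one value per line)
mmmms() {
    LC_ALL=C sort -n | LC_ALL=C awk '
        { v[NR] = $1; sum += $1 }
        END {
            if (NR < 2) { print "need at least 2 values"; exit 1 }
            mean = sum / NR
            for (i = 1; i <= NR; i++) ss += (v[i] - mean) ^ 2
            sd = sqrt(ss / (NR - 1))   # sample standard deviation, as sd() in R
            # Values are sorted, so the median is the middle one (or the mean of the two middle ones)
            median = (NR % 2) ? v[(NR + 1) / 2] : (v[NR / 2] + v[NR / 2 + 1]) / 2
            printf "%g\t%g\t%g\t%g\t%g\t%g\n", v[1], v[NR], median, mean, sd, sd / median
        }'
}

# Usage on ten illustrative elapsed times (seconds)
printf '%s\n' 2.02 1.96 1.97 1.98 1.97 2.01 2.00 1.99 1.99 1.98 | mmmms
```

The columns appear in the same order as those of /tmp/Rmmmms-$USER.r, printed with six significant digits.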

First steps in parallelism

Before exploration, check the instrumentation !

An illustrative example: Pi Dart Dash

Principle, inputs & outputs

The most common example of a Monte Carlo program: estimate the number Pi from the ratio between the number of random points located inside a quarter of a circle and the total number of points, which are uniformly distributed over the enclosing square. It needs:

- a random number generator for the 2 coordinates: pseudo-random number generators are generally based on logic and arithmetic functions on integers.

- operators to compute the squares of the 2 numbers and their sum

- a comparator

The input & output are the simplest one:

- Input: an integer as number of iterations

- Output: an integer as number of points inside the quarter of circle

- Output (bis): an estimation of Pi number (very inefficient method but the result is well known, so easily checked).
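The estimate follows from a ratio of areas: the quarter of a circle covers Pi/4 of the square, so Pi is close to 4 times the fraction of points that fall inside. A minimal sketch with illustrative counts (785251 points inside out of 1000000 draws), using awk for the floating-point division; the variable name PI is an assumption of this sketch:

```shell
# Illustrative counts: points inside the quarter of a circle, and total number of draws
INSIDE=785251
ITERATIONS=1000000
# Pi is approximately 4 * INSIDE / ITERATIONS
PI=$(LC_ALL=C awk -v inside="$INSIDE" -v total="$ITERATIONS" 'BEGIN { printf "%.6f", 4 * inside / total }')
echo "Pi $PI"
```

With these counts the estimate is 3.141004, correct to three decimal places after one million draws.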

The following implementation is a bash shell script. The RANDOM variable provides a random integer between 0 and 32767, so the boundary of the quarter of a circle is located at 32767*32767.

Copy/Paste the following block inside a terminal.

tee /tmp/PiMC-$USER.sh <<EOF
#!/bin/bash
if [ -z "\$1" ]
then
    echo "Please provide a number of iterations!"
    exit
fi
INSIDE=0
THEONE=\$((32767**2))
ITERATION=0
while [ \$ITERATION -lt \$1 ]
do
    X=\$((RANDOM))
    Y=\$((RANDOM))
    if [ \$((\$X*\$X+\$Y*\$Y)) -le \$THEONE ]
    then
        INSIDE=\$((\$INSIDE+1))
    fi
    ITERATION=\$((\$ITERATION+1))
done
echo Pi \$(echo 4.*\$INSIDE/\$ITERATION | bc -l)
echo Inside \$INSIDE
echo Iterations \$1
EOF
chmod u+x /tmp/PiMC-$USER.sh

A program named PiMC-$USER.sh, located in /tmp, where $USER is your login, is created and ready to use.

Question #?: launch the ''PiMC'' program with several numbers of iterations: from 100 to 10000000

Question #?: launch the ''PiMC'' program prefixed by ''/usr/bin/time'' with several numbers of iterations: 100 to 1000000

Split the execution in equal parts

The following command line divides the job to do (1000000 iterations) into PR equal jobs.

- Define the number of iterations of each job

- Launch jobs sequentially by combining seq and xargs

ITERATIONS=1000000

PR=1

EACHJOB=$([ $(($ITERATIONS % $PR)) == 0 ] && echo $(($ITERATIONS/$PR)) || echo $(($ITERATIONS/$PR+1)))

seq $PR | /usr/bin/time xargs -I '{}' /tmp/PiMC-$USER.sh $EACHJOB '{}' 2>&1 | grep -v timed | egrep '(Pi|Inside|Iterations|time)'

Example of execution on a host with 32 logical CPUs (Hyper-Threading activated):

Pi 3.14100400000000000000
Inside 785251
Iterations 1000000
TIME User time (seconds): 30.32
TIME System time (seconds): 0.08
TIME Elapsed (wall clock) time : 30.43

On the previous launch, User time represents 99.6% of Elapsed time. Internal system operations represent only about 0.3%.
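The EACHJOB expression of the command line above rounds the division up, so that PR jobs always cover at least ITERATIONS iterations. A minimal sketch of the same rounding, written here with the equivalent integer formula (ITERATIONS + PR - 1) / PR, which is an alternative spelling and not the one used in this session; the variable name TOTAL is hypothetical:

```shell
ITERATIONS=1000000
for PR in 1 3 32
do
    # Integer division rounded up: same value as the test on the remainder
    EACHJOB=$(( (ITERATIONS + PR - 1) / PR ))
    # Number of iterations really executed by the PR jobs
    TOTAL=$(( EACHJOB * PR ))
    echo "PR=$PR EACHJOB=$EACHJOB TOTAL=$TOTAL"
done
```

With PR=3 each job runs 333334 iterations, so 1000002 iterations are executed in total: when PR does not divide ITERATIONS, slightly more work is done than requested.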

Replace the value of PR, set to 1, by the number of CPUs detected with the lscpu command.

ITERATIONS=1000000

PR=$(lscpu | grep '^CPU(s):' | awk '{ print $NF }')

EACHJOB=$([ $(($ITERATIONS % $PR)) == 0 ] && echo $(($ITERATIONS/$PR)) || echo $(($ITERATIONS/$PR+1)))

seq $PR | /usr/bin/time xargs -I '{}' /tmp/PiMC-$USER.sh $EACHJOB '{}' 2>&1 | grep -v timed | egrep '(Pi|Inside|Iterations|time)'

On a dual-socket workstation with 8-core CPUs and Hyper-Threading activated, 32 CPUs are detected:

Pi 3.14073600000000000000
Inside 24537
Iterations 31250
Pi 3.14073600000000000000
Inside 24537
Iterations 31250
Pi 3.12870400000000000000
Inside 24443
Iterations 31250
Pi 3.11910400000000000000
Inside 24368
Iterations 31250
Pi 3.11667200000000000000
Inside 24349
Iterations 31250
Pi 3.13625600000000000000
Inside 24502
Iterations 31250
Pi 3.14176000000000000000
Inside 24545
Iterations 31250
Pi 3.13254400000000000000
Inside 24473
Iterations 31250
Pi 3.14496000000000000000
Inside 24570
Iterations 31250
Pi 3.12960000000000000000
Inside 24450
Iterations 31250
Pi 3.12140800000000000000
Inside 24386
Iterations 31250
Pi 3.13587200000000000000
Inside 24499
Iterations 31250
Pi 3.14880000000000000000
Inside 24600
Iterations 31250
Pi 3.12870400000000000000
Inside 24443
Iterations 31250
Pi 3.14368000000000000000
Inside 24560
Iterations 31250
Pi 3.13945600000000000000
Inside 24527
Iterations 31250
Pi 3.13203200000000000000
Inside 24469
Iterations 31250
Pi 3.14803200000000000000
Inside 24594
Iterations 31250
Pi 3.14688000000000000000
Inside 24585
Iterations 31250
Pi 3.14368000000000000000
Inside 24560
Iterations 31250
Pi 3.13305600000000000000
Inside 24477
Iterations 31250
Pi 3.15276800000000000000
Inside 24631
Iterations 31250
Pi 3.14931200000000000000
Inside 24604
Iterations 31250
Pi 3.15072000000000000000
Inside 24615
Iterations 31250
Pi 3.14265600000000000000
Inside 24552
Iterations 31250
Pi 3.14790400000000000000
Inside 24593
Iterations 31250
Pi 3.14572800000000000000
Inside 24576
Iterations 31250
Pi 3.14496000000000000000
Inside 24570
Iterations 31250
Pi 3.14240000000000000000
Inside 24550
Iterations 31250
Pi 3.12908800000000000000
Inside 24446
Iterations 31250
Pi 3.13344000000000000000
Inside 24480
Iterations 31250
Pi 3.12755200000000000000
Inside 24434
Iterations 31250
TIME User time (seconds): 32.56
TIME System time (seconds): 0.12
TIME Elapsed (wall clock) time : 33.05

In this example, the User time represents 98.52% of the Elapsed time. The total Elapsed time is about 9% greater than for the unsplit run (33.05 seconds instead of 30.43). So, splitting has a cost. The System time represents 0.4% of the Elapsed time.

Replace the end of the command line to extract the total number of Inside points.

ITERATIONS=1000000

PR=$(lscpu | grep '^CPU(s):' | awk '{ print $NF }')

EACHJOB=$([ $(($ITERATIONS % $PR)) == 0 ] && echo $(($ITERATIONS/$PR)) || echo $(($ITERATIONS/$PR+1)))

seq $PR | /usr/bin/time xargs -I '{}' /tmp/PiMC-$USER.sh $EACHJOB '{}' 2>&1 | grep ^Inside | awk '{ sum+=$2 } END { printf "Insides %i", sum }' ; echo

In this illustrative case, each job is independent of the others. They can be distributed to all the computing resources available. The xargs command line builder does it for you with -P <ConcurrentProcess>.
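The effect of -P can be checked on a dummy job before using it on PiMC. A minimal sketch, where sleep 1 stands in for a real computation and the variable names START, END and ELAPSED are only illustrative:

```shell
# 4 independent one-second jobs, at most 4 of them running at the same time
START=$(date +%s)
seq 4 | xargs -I '{}' -P 4 sleep 1
END=$(date +%s)
ELAPSED=$((END - START))
echo "Elapsed with -P 4: about $ELAPSED s (about 4 s expected without -P)"
```

Because sleep does not compute anything, this only illustrates the concurrent launch: the Elapsed time is that of one job instead of the sum of the four.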

So, the previous command becomes

ITERATIONS=1000000

PR=$(lscpu | grep '^CPU(s):' | awk '{ print $NF }')

EACHJOB=$([ $(($ITERATIONS % $PR)) == 0 ] && echo $(($ITERATIONS/$PR)) || echo $(($ITERATIONS/$PR+1)))

seq $PR | /usr/bin/time xargs -I '{}' -P $PR /tmp/PiMC-$USER.sh $EACHJOB '{}' 2>&1 | grep -v timed | egrep '(Pi|Inside|Iterations|time)'

Pi 3.13843200000000000000
Inside 24519
Iterations 31250
Pi 3.14688000000000000000
Inside 24585
Iterations 31250
Pi 3.15686400000000000000
Inside 24663
Iterations 31250
Pi 3.14508800000000000000
Inside 24571
Iterations 31250
Pi 3.14572800000000000000
Inside 24576
Iterations 31250
Pi 3.15174400000000000000
Inside 24623
Iterations 31250
Pi 3.14547200000000000000
Inside 24574
Iterations 31250
Pi 3.12972800000000000000
Inside 24451
Iterations 31250
Pi 3.14688000000000000000
Inside 24585
Iterations 31250
Pi 3.14521600000000000000
Inside 24572
Iterations 31250
Pi 3.13740800000000000000
Inside 24511
Iterations 31250
Pi 3.14316800000000000000
Inside 24556
Iterations 31250
Pi 3.16147200000000000000
Inside 24699
Iterations 31250
Pi 3.12665600000000000000
Inside 24427
Iterations 31250
Pi 3.13625600000000000000
Inside 24502
Iterations 31250
Pi 3.14496000000000000000
Inside 24570
Iterations 31250
Pi 3.14163200000000000000
Inside 24544
Iterations 31250
Pi 3.13510400000000000000
Inside 24493
Iterations 31250
Pi 3.13830400000000000000
Inside 24518
Iterations 31250
Pi 3.14419200000000000000
Inside 24564
Iterations 31250
Pi 3.14035200000000000000
Inside 24534
Iterations 31250
Pi 3.14624000000000000000
Inside 24580
Iterations 31250
Pi 3.13190400000000000000
Inside 24468
Iterations 31250
Pi 3.15097600000000000000
Inside 24617
Iterations 31250
Pi 3.15494400000000000000
Inside 24648
Iterations 31250
Pi 3.13817600000000000000
Inside 24517
Iterations 31250
Pi 3.14547200000000000000
Inside 24574
Iterations 31250
Pi 3.15814400000000000000
Inside 24673
Iterations 31250
Pi 3.13459200000000000000
Inside 24489
Iterations 31250
Pi 3.12985600000000000000
Inside 24452
Iterations 31250
Pi 3.15238400000000000000
Inside 24628
Iterations 31250
Pi 3.15072000000000000000
Inside 24615
Iterations 31250
TIME User time (seconds): 59.52
TIME System time (seconds): 0.16
TIME Elapsed (wall clock) time : 2.06

The total User time jumped from 32 to 59 seconds (+83%)! But Elapsed time is reduced from 33.05 to 2.06 seconds (-94%, about 16 times faster). The System time represents about 8% of Elapsed time.
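The ratios between the sequential and the parallel launches can be recomputed with awk. A minimal sketch using the Elapsed times measured above; the variable names are illustrative, and the parallel efficiency (speedup divided by the number of CPUs) is an extra indicator, not one used elsewhere in this session:

```shell
T_SEQ=33.05   # Elapsed time without -P (seconds)
T_PAR=2.06    # Elapsed time with -P 32 (seconds)
CPUS=32       # Number of CPUs detected on the workstation
REPORT=$(LC_ALL=C awk -v s="$T_SEQ" -v p="$T_PAR" -v n="$CPUS" 'BEGIN {
    printf "Speedup: %.1f\n", s / p
    printf "Elapsed time reduction: %.0f%%\n", 100 * (1 - p / s)
    printf "Parallel efficiency on %d CPUs: %.0f%%\n", n, 100 * s / p / n
}')
echo "$REPORT"
```

With these values the speedup is 16.0, the Elapsed time is reduced by 94% and the efficiency is 50%: the 32 logical CPUs deliver about half of an ideal 32-fold gain.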

In conclusion, splitting a huge job into small jobs has an Operating System cost. But distributing the jobs using the system can be very efficient to reduce the Elapsed time.

We can improve the statistics by launching the previous program 10 times. We store the different 'time' estimators inside a logfile named /tmp/PiMC-$USER_YYYYmmddHHMM.log

ITERATIONS=1000000

PR=$(lscpu | grep '^CPU(s):' | awk '{ print $NF }')

EACHJOB=$([ $(($ITERATIONS % $PR)) == 0 ] && echo $(($ITERATIONS/$PR)) || echo $(($ITERATIONS/$PR+1)))

LOGFILE=/tmp/$(basename /tmp/PiMC-$USER .sh)_$(date '+%Y%m%d%H%M').log

seq 10 | while read ITEM

do

seq $PR | /usr/bin/time xargs -I '{}' -P $PR /tmp/PiMC-$USER.sh $EACHJOB '{}' 2>&1 | grep -v timed | egrep '(time)'

done > $LOGFILE

echo Results stored in $LOGFILE

TIME User time (seconds): 59.81
TIME System time (seconds): 0.14
TIME Elapsed (wall clock) time : 2.02
TIME User time (seconds): 59.38
TIME System time (seconds): 0.10
TIME Elapsed (wall clock) time : 1.96
TIME User time (seconds): 59.20
TIME System time (seconds): 0.22
TIME Elapsed (wall clock) time : 1.97
TIME User time (seconds): 59.50
TIME System time (seconds): 0.09
TIME Elapsed (wall clock) time : 1.98
TIME User time (seconds): 59.37
TIME System time (seconds): 0.14
TIME Elapsed (wall clock) time : 1.97
TIME User time (seconds): 59.61
TIME System time (seconds): 0.16
TIME Elapsed (wall clock) time : 2.01
TIME User time (seconds): 59.12
TIME System time (seconds): 0.16
TIME Elapsed (wall clock) time : 2.00
TIME User time (seconds): 59.70
TIME System time (seconds): 0.12
TIME Elapsed (wall clock) time : 1.99
TIME User time (seconds): 59.34
TIME System time (seconds): 0.14
TIME Elapsed (wall clock) time : 1.99
TIME User time (seconds): 59.33
TIME System time (seconds): 0.12
TIME Elapsed (wall clock) time : 1.98

With magic Rmmmms-$USER.r command, we can extract statistics on different times

- for Elapsed time :

cat /tmp/PiMC-jmylq_201706291231.log | grep Elapsed | awk '{ print $NF }' | /tmp/Rmmmms-$USER.r

1.96 2.02 1.985 1.987 0.01888562 0.009514167

- for System time :

cat /tmp/PiMC-jmylq_201706291231.log | grep System | awk '{ print $NF }' | /tmp/Rmmmms-$USER.r

0.09 0.22 0.14 0.139 0.03665151 0.2617965

- for User time :

cat /tmp/PiMC-jmylq_201706291231.log | grep User | awk '{ print $NF }' | /tmp/Rmmmms-$USER.r

59.12 59.81 59.375 59.436 0.2179297 0.003670394

The previous results show that the variability, in this case, is below 1% for Elapsed and User times but reaches 26% for System time.

If we take 10x the previous number of iterations:

- With PR=1:

TIME User time (seconds): 313.36
TIME System time (seconds): 0.93
TIME Elapsed (wall clock) time : 314.40

- With PR=32:

TIME User time (seconds): 606.06
TIME System time (seconds): 1.65
TIME Elapsed (wall clock) time : 19.46

On the first half of cores: 0 to 15

ITERATIONS=10000000 ; PR=$(($(lscpu | grep '^CPU(s):' | awk '{ print $NF }')/2)) ; EACHJOB=$([ $(($ITERATIONS % $PR)) == 0 ] && echo $(($ITERATIONS/$PR)) || echo $(($ITERATIONS/$PR+1))) ; seq $PR | /usr/bin/time hwloc-bind -p pu:0-15 xargs -I '{}' -P $PR /tmp/PiMC-$USER.sh $EACHJOB '{}' 2>&1 | grep -v timed | egrep '(Pi|Inside|Iterations|time)'

On the second half of cores: 16 to 31

ITERATIONS=10000000 ; PR=$(($(lscpu | grep '^CPU(s):' | awk '{ print $NF }')/2)) ; EACHJOB=$([ $(($ITERATIONS % $PR)) == 0 ] && echo $(($ITERATIONS/$PR)) || echo $(($ITERATIONS/$PR+1))) ; seq $PR | /usr/bin/time hwloc-bind -p pu:16-31 xargs -I '{}' -P $PR /tmp/PiMC-$USER.sh $EACHJOB '{}' 2>&1 | grep -v timed | egrep '(Pi|Inside|Iterations|time)'

Why so much user time ?

HT Effect : why so many people deactivate…

Examples of codes

- xGEMM

- NBody.py

- PiXPU.py

Choose your preferred parallel code

Improvement of statistics

Scalability law

Amdahl's Law

Mylq Law